So you just got started with serverless or S3 and want to know how to upload objects programmatically with Node.js? Well, here's an in-depth look at it.

This article is inspired by my YouTube tutorial - The Ultimate S3 & Nodejs Guide.

First, we need to understand the various methods we can use to upload a file to an S3 bucket:

- PutObject

- Pre-Signed Url

- Multipart Upload

For simplicity, I'll only talk about the first one; we can discuss the others in another blog post.

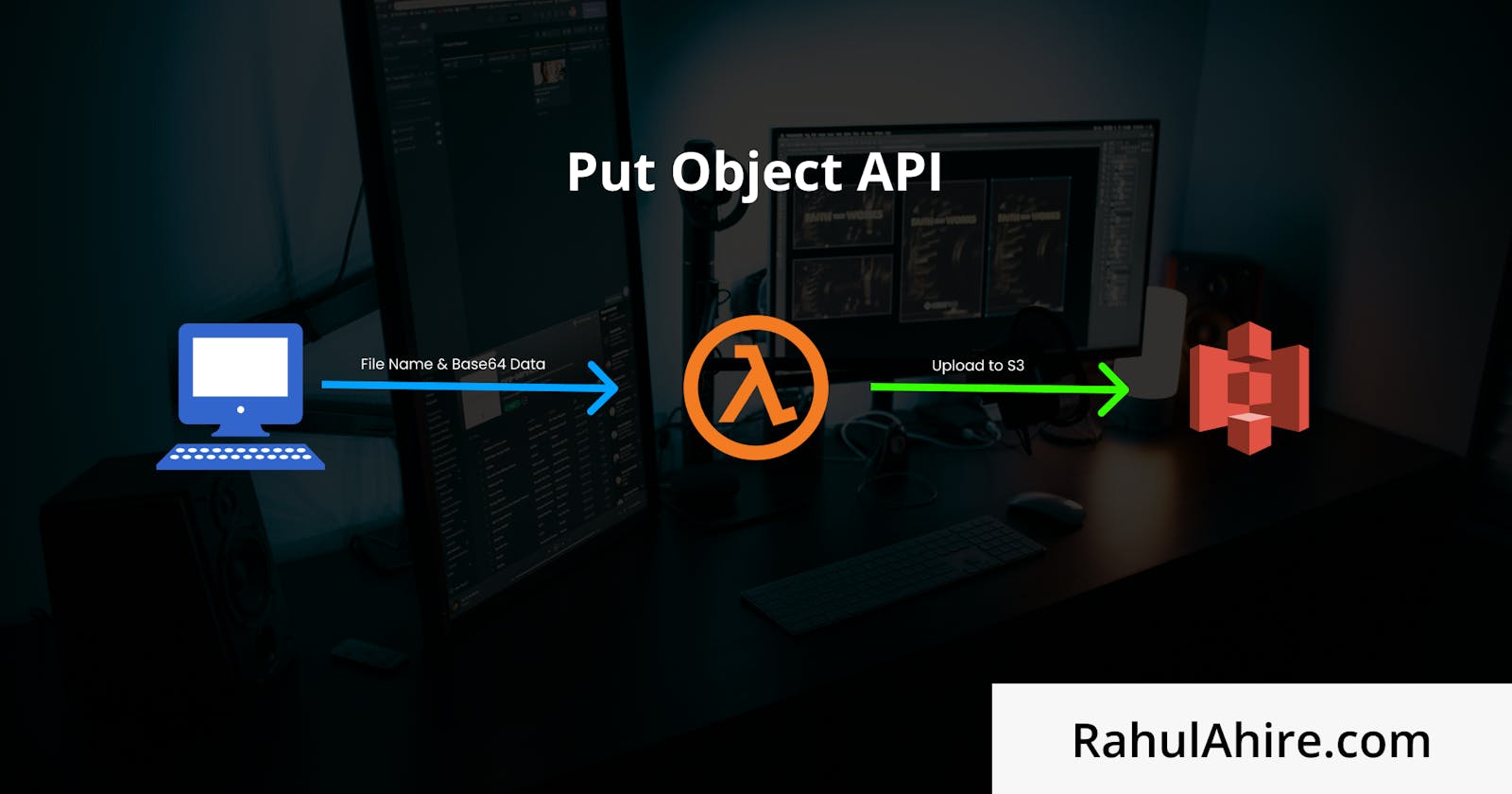

putObject takes the file's contents as binary data and uploads them to the bucket. Let's say we want to build an API that uploads a profile picture to an S3 bucket. This involves two parts: the frontend and the backend.

For the frontend we'll keep it simple: no React, nothing fancy. For the backend we'll use API Gateway + Lambda, set up with the Serverless Framework.

Let's focus on the easier part first, the frontend (HTML + JavaScript). Here's the HTML:

    <input type="file" id="putObject">
    <!-- The script attaches its click handler to this button -->
    <button id="putObjectBtn">Upload</button>

    <script src="https://cdnjs.cloudflare.com/ajax/libs/axios/0.21.1/axios.min.js" crossorigin="anonymous"></script>
    <script src="./frontendPutObject.js"></script>

And here's the script, `frontendPutObject.js`:

    // Upload the selected file when the button is clicked
    document.getElementById('putObjectBtn').addEventListener('click', () => {
      const putObject_fileInput = document.getElementById('putObject');
      const file = putObject_fileInput.files[0];
      if (!file) {
        console.error('No file selected');
        return;
      }
      const fileName = file.name;
      const reader = new FileReader();
      const url = `https://68qb5fre0e.execute-api.ap-south-1.amazonaws.com/dev/putObject`;

      // Log upload progress as a percentage
      const config = {
        onUploadProgress: (progressEvent) =>
          console.log(Math.round((progressEvent.loaded / progressEvent.total) * 100))
      };

      // Runs once the file has been read as a data URL
      reader.onloadend = () => {
        // Drop the "data:<mime>;base64," prefix and keep only the base64 payload
        const base64String = reader.result.split('base64,')[1];
        const dataInfo = {
          fileName: fileName,
          base64String: base64String
        };

        axios
          .post(url, dataInfo, config)
          .then((r) => {
            console.log(r);
          })
          .catch((err) => {
            console.error(err);
          });
      };

      reader.readAsDataURL(file);
    });

If you look carefully at the code, you'll notice that we take the file and convert it to base64 encoding, which is what we send to our Lambda handler.
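To make the base64 step concrete, here is a minimal sketch of what the `split('base64,')` line is doing. `extractBase64` is a hypothetical helper, not part of the original code, and the sample data URL is built by hand with Node's `Buffer` to mimic what `FileReader.readAsDataURL` would produce for a tiny text file.

```javascript
// Hypothetical helper (not in the original code): pulls the base64
// payload out of a data URL such as "data:image/png;base64,iVBORw0...".
function extractBase64(dataUrl) {
  const marker = ';base64,';
  const index = dataUrl.indexOf(marker);
  if (!dataUrl.startsWith('data:') || index === -1) {
    throw new Error('Expected a base64-encoded data URL');
  }
  return dataUrl.slice(index + marker.length);
}

// Assumed sample input: a data URL for a text file containing "hello".
const sampleDataUrl =
  'data:text/plain;base64,' + Buffer.from('hello').toString('base64');

const payload = extractBase64(sampleDataUrl);
console.log(payload); // aGVsbG8=
```

Unlike a bare `split`, this version throws when the input is not a base64 data URL instead of silently returning `undefined`, which may be easier to debug in the browser.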

I'll assume you know how to create a REST API with the Serverless Framework or any other deployment framework. If you don't, here's my video tutorial on Serverless Framework Crash Course. With that said, let's see what the Lambda handler looks like.

    const AWS = require('aws-sdk');

    const s3 = new AWS.S3({ signatureVersion: 'v4', region: 'ap-south-1' });

    // CORS headers shared by the success and error responses
    const headers = {
      'Access-Control-Allow-Origin': '*',
      'Access-Control-Allow-Credentials': true
    };

    exports.handler = async (event) => {
      try {
        // The frontend sends { fileName, base64String } as JSON
        const body = JSON.parse(event.body);
        const fileName = body.fileName;
        const base64String = body.base64String;

        // Decode the base64 string back into raw bytes
        const buffer = Buffer.from(base64String, 'base64');

        const params = {
          Body: buffer,
          Bucket: process.env.bucketName,
          Key: fileName
        };

        await s3.putObject(params).promise();

        return {
          statusCode: 200,
          headers,
          body: `file Uploaded`
        };
      } catch (err) {
        console.error(err);
        return {
          statusCode: 500,
          headers,
          // A plain Error serializes to {} with JSON.stringify, so return its message instead
          body: JSON.stringify({ message: err.message })
        };
      }
    };

Here we are using the putObject API to upload our file to S3. putObject stores the Body exactly as it receives it, so we have to decode the base64 string into binary first; otherwise the object in S3 would be base64 text rather than the image itself.

    const buffer = Buffer.from(base64String, 'base64');
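As a quick sanity check, the encode/decode round trip can be sketched in plain Node.js. The four sample bytes (the start of the PNG signature) are an assumption chosen for illustration; in the real handler the base64 string comes from the request body.

```javascript
// Assumed sample data: the first four bytes of a PNG file.
const original = Buffer.from([0x89, 0x50, 0x4e, 0x47]);

// Encode to base64, which is what the browser sends in the JSON body ...
const base64String = original.toString('base64');

// ... and decode it back to raw bytes, which is what the handler does.
const buffer = Buffer.from(base64String, 'base64');

console.log(base64String);            // iVBORw==
console.log(buffer.equals(original)); // true
console.log(buffer.length);           // 4
```

The decoded buffer is byte-for-byte identical to the original, which is why the object that lands in S3 opens as a normal image.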

And that's how you can upload a file to S3. But, and it's a big but, this method has limits: API Gateway has a time limit of about 30 seconds and a maximum payload size of 10 MB. That means if your upload takes longer than 30 seconds, the request is cut off. And because base64 encoding inflates the data by roughly a third, the file itself has to be noticeably smaller than 10 MB. This is not ideal for uploading large files. In that case, you should use a pre-signed URL or multipart upload.

With that said, thanks for reading this article, and I'll see you next time.
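To get a feel for the payload limit, here is a rough sketch of a pre-flight size check. It assumes the 10 MB figure quoted above and the standard base64 ratio of 4 output characters for every 3 input bytes; `base64Length`, `fitsInPayload`, and the 1 KB allowance for the JSON wrapper are hypothetical names and numbers picked for illustration.

```javascript
// The payload limit quoted in the article, in bytes.
const MAX_PAYLOAD_BYTES = 10 * 1024 * 1024;

// base64 turns every 3 bytes into 4 characters (the last group is padded).
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3);
}

// Hypothetical check: would a file of this size fit in one request?
// overheadBytes is a rough allowance for the JSON wrapper and file name.
function fitsInPayload(fileSizeBytes, limitBytes = MAX_PAYLOAD_BYTES, overheadBytes = 1024) {
  return base64Length(fileSizeBytes) + overheadBytes <= limitBytes;
}

console.log(base64Length(5));                // 8
console.log(fitsInPayload(5 * 1024 * 1024)); // true
console.log(fitsInPayload(8 * 1024 * 1024)); // false
```

In the browser, the same check could be run against `file.size` before reading the file, so that an oversized upload is rejected early instead of failing at the API.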

Website - RahulAhire.com